Dear readers,

This is my last observation for this year.

I took data on the heights of the plant stems (to the nearest cm), whether there were flower buds, and the milkiness of the leaves (1=none, 2=little, 3=moderate, 4=excessive), which I checked by cutting the leaves. The more bitter the leaves are, the more milkiness they have.

Growing three lettuce populations in the hydroponics lab under Professor Michaels at the University of Minnesota

Friday, December 19, 2014

Thursday, December 11, 2014

Twelfth Week: Observation

Dear readers,

Today, I continued to take data on whether each plant is bolting and if it is, whether it has flower buds or not.

Except for a few, almost all of the plants were bolting, and two plants from Freedom's Mix already had flower buds. (This is not a good thing, since we want to breed plants that bolt as late as possible.)

Thursday, December 4, 2014

Eleventh Week: Observation

Dear readers,

We stopped harvesting last week because some of the plants had already exited their vegetative stage. (One even had flower buds already.)

Instead, today I took data on whether plants were bolting or not and the severity of mildew damage (1-slight damage, 2-moderate damage, 3-severe damage) on each plant.

I noted a plant as bolting if I could see the stem between its leaves.

All of the plants were suffering from slight to severe mildew damage. (If this happens, you should try to clean up the bottom leaves so that you can avoid further infection.)
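The scoring scheme described in this post could be recorded in R roughly as follows. This is only a sketch: the scores are made up for illustration, and the names `mildew_score`, `bolting`, and `obs` are my assumptions, not the ones used in the lab.

```r
# Hypothetical scores for five plants (made up, NOT the lab data)
mildew_score <- c(1, 3, 2, 2, 1)                  # 1-3 mildew scale from the post
bolting      <- c(TRUE, TRUE, FALSE, TRUE, TRUE)  # TRUE if the stem is visible

# Store the mildew score as an ordered factor so that
# slight < moderate < severe is preserved in later analyses
mildew <- factor(mildew_score,
                 levels  = 1:3,
                 labels  = c("slight", "moderate", "severe"),
                 ordered = TRUE)

obs <- data.frame(plant = 1:5, bolting = bolting, mildew = mildew)

# Count plants per damage level and the share of plants that are bolting
print(table(obs$mildew))
print(mean(obs$bolting))
```

Keeping the score as an ordered factor, instead of a plain number, avoids treating the gap between "slight" and "moderate" as equal to the gap between "moderate" and "severe".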

Thursday, November 20, 2014

Tenth Week: Observation 6

Dear readers,

Today, we continued to take data on the total weight of the larger leaves (leaving three still-growing leaves behind for the next harvest).

Some of the plants were not dead but were growing too slowly, so we could not harvest from those smaller plants.

Thursday, November 13, 2014

Ninth Week: Observation 5

Dear readers,

Today, we took some data on taste along with the total weight of the bigger leaves (leaving three still-growing leaves behind).

Overall, the leaves from Freedom's Mix were the sweetest. However, the plants from Philosopher's Mix had thicker, rougher leaves with a sweet and nutty taste.

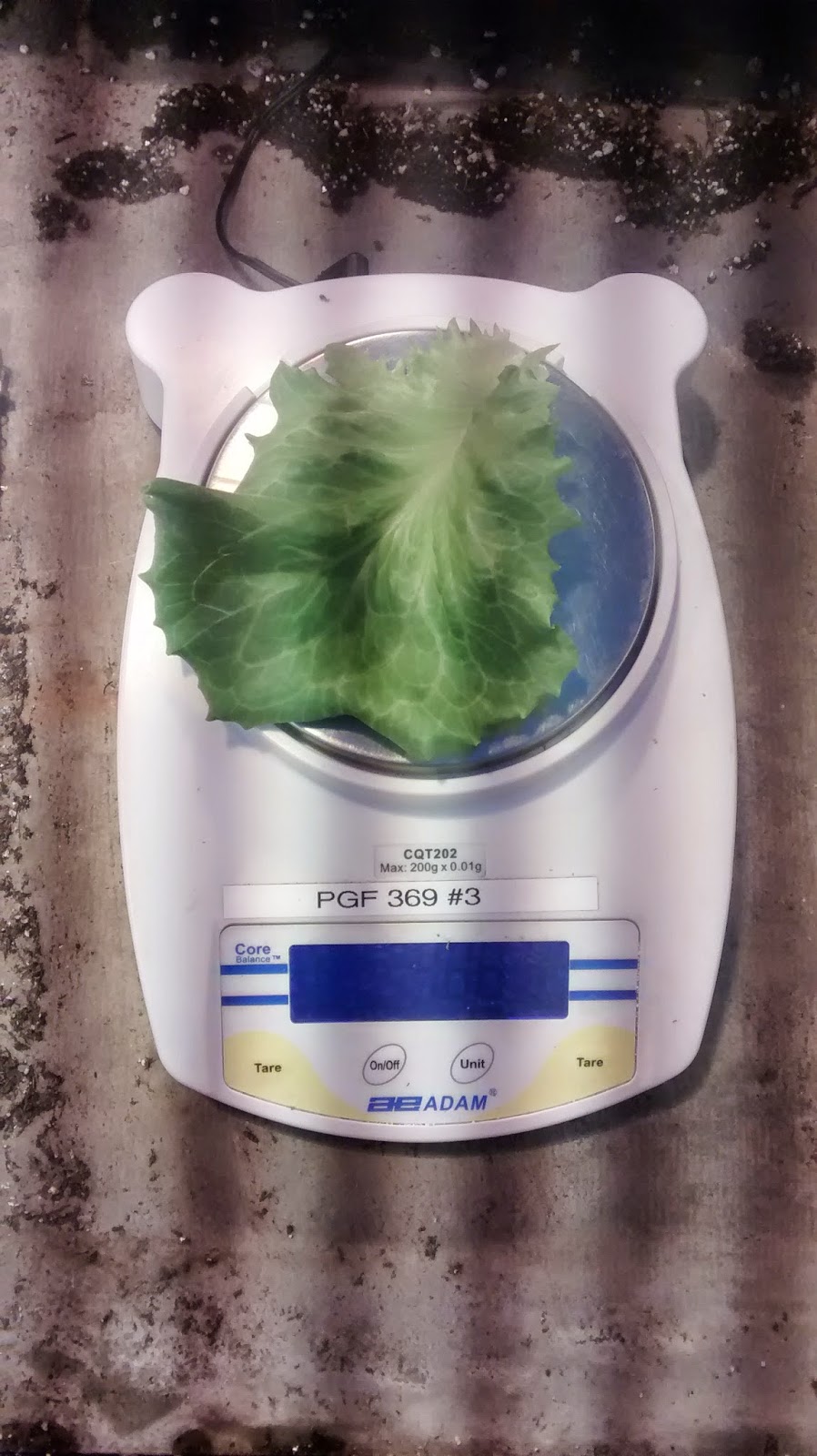

And, the leaves grow this big!

Eighth Week: Observation 4

Dear readers,

This week, we took the data for each population on a different day. (However, this will not affect our project's results, because we are only comparing plants within each population.)

We took data on the total weight of all the harvested leaves from each plant. (We harvested all the large leaves from each plant, leaving at least three smaller leaves that will grow larger for next week's harvest.)

Thursday, October 30, 2014

Seventh Week: Observation 3

Dear Readers,

Today, I collected data on the colors, shapes, speckles, and weights of the four largest leaves from each plant.

Professor Michaels raised one concern: the plants from Morton's Mix are showing tip burn from a lack of calcium, since they cannot absorb it fast enough.

Also, two plants from Morton's Mix and one plant from Freedom's Mix were diseased, and two plants from Morton's Mix were already dead. However, all of the roots looked pretty healthy. (Professor Michaels called this bridging.)

Thursday, October 23, 2014

Sixth Week: Data and Observation 2

Dear readers,

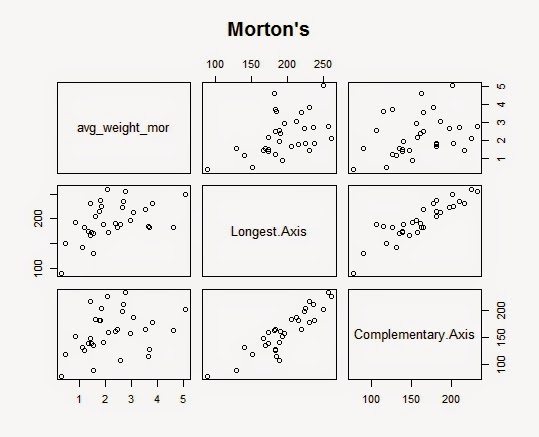

Today, I took some data on the length of the longest axis (mm) of the leaves and the length of the complementary axis (mm) of the leaves, so that I could measure (with a ruler) the physical growth of the leaves.

Besides physical growth, I also measured the weight of the two largest leaves (in grams) by harvesting (with scissors) two leaves from each plant.

For fitting the regression line, I took the average of the weights of the two leaves. I wanted to know whether there is a correlation between the weight of the two largest leaves and the length of each axis.
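As a minimal sketch of this step, the averaging and the pairwise correlations could be computed in R as shown here. The numbers are made up for illustration, and the names `mor_example`, `weight_leaf1`, and `weight_leaf2` are assumptions; only `Longest.Axis` and `Complementary.Axis` match the column names in my data.

```r
# Hypothetical toy data: made up for illustration, NOT the lab measurements
mor_example <- data.frame(
  Longest.Axis       = c(150, 180, 200, 220, 170),  # mm
  Complementary.Axis = c(110, 140, 160, 190, 120),  # mm
  weight_leaf1       = c(2.1, 3.0, 3.8, 4.5, 2.6),  # g (assumed column name)
  weight_leaf2       = c(1.9, 2.8, 3.4, 4.1, 2.2)   # g (assumed column name)
)

# Average weight of the two harvested leaves for each plant
mor_example$avg_weight <- rowMeans(
  mor_example[, c("weight_leaf1", "weight_leaf2")]
)

# Pairwise Pearson correlations between the weight and the two axes
cor_matrix <- cor(
  mor_example[, c("avg_weight", "Longest.Axis", "Complementary.Axis")]
)
print(round(cor_matrix, 2))
```

A call such as `pairs(mor_example)` would draw the matching scatterplot matrix.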

In Morton's Mix, we see a very strong correlation between the weight and the longest axis, and also between the two axes. However, the relationship between the weight and the complementary axis looks mild. So, let's check the summary table for the regression on Morton's Mix.

> summary(mmod)

Call:

glm(formula = avg_weight_mor ~ Longest.Axis + Complementary.Axis, data = mor)

Deviance Residuals:

Min 1Q Median 3Q Max

-1.4458 -0.5931 -0.1255 0.6400 2.8105

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.92087 0.95130 -0.968 0.3410

Longest.Axis 0.02926 0.01047 2.795 0.0091 **

Complementary.Axis -0.01586 0.01023 -1.551 0.1317

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for gaussian family taken to be 0.9603569)

Null deviance: 39.636 on 31 degrees of freedom

Residual deviance: 27.850 on 29 degrees of freedom

AIC: 94.368

Number of Fisher Scoring iterations: 2

From this we can see that the complementary axis is not a significant predictor in this regression, since the p-value (0.1317) for the hypothesis test on its coefficient (null: Beta3 = 0, alternative: Beta3 does not equal 0) is greater than the alpha level (0.05).
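To make the test concrete, here is a small sketch that recomputes the t value and the two-sided p-value for the Complementary.Axis row, using only the rounded estimate, standard error, and residual degrees of freedom printed in the summary for `mmod`. The variable names are mine, and the results differ slightly from the printed ones because of the rounding.

```r
# Values copied from the Complementary.Axis row of summary(mmod)
estimate  <- -0.01586
std_error <- 0.01023
df_resid  <- 29        # residual degrees of freedom

# t statistic: the estimate divided by its standard error
t_value <- estimate / std_error

# Two-sided p-value from the t distribution
p_value <- 2 * pt(-abs(t_value), df = df_resid)

# Compare with the alpha level
alpha <- 0.05
reject_null <- p_value < alpha

print(c(t_value = t_value, p_value = p_value))
print(reject_null)
```

Since the p-value is above 0.05, `reject_null` is `FALSE`, so we do not reject the null hypothesis that the coefficient is zero.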

However, as I mentioned above, the two axes may be correlated with each other. So, let's try to fit a regression line for them.

> summary(mmod.2)

Call:

glm(formula = Longest.Axis ~ Complementary.Axis, data = mor)

Deviance Residuals:

Min 1Q Median 3Q Max

-33.709 -8.752 -3.709 12.502 39.974

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 55.61310 13.12115 4.238 0.000198 ***

Complementary.Axis 0.87302 0.07999 10.914 5.74e-12 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for gaussian family taken to be 292.1042)

Null deviance: 43557.0 on 31 degrees of freedom

Residual deviance: 8763.1 on 30 degrees of freedom

AIC: 276.41

Number of Fisher Scoring iterations: 2
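As a rough check on how strongly the two axes are related, the deviances printed for `mmod.2` can be turned into an R-squared value. This sketch assumes the default gaussian family with identity link, where the null deviance is the total sum of squares and the residual deviance is the residual sum of squares. The variable names are mine.

```r
# Deviances copied from summary(mmod.2)
null_deviance     <- 43557.0
residual_deviance <- 8763.1

# Share of the variation in Longest.Axis explained by Complementary.Axis
r_squared <- 1 - residual_deviance / null_deviance

# With a single predictor, the correlation is the square root of R-squared
# (positive here because the estimated slope, 0.87302, is positive)
r <- sqrt(r_squared)

print(round(c(r_squared = r_squared, r = r), 3))  # roughly 0.80 and 0.89
```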

Here, the result is as we expected. So, instead of treating the two axes as separate variables, let's combine the two and take their mean.

> summary(mmod2)

Call:

glm(formula = avg_weight_mor ~ avg_axis_mor, data = mor)

Deviance Residuals:

Min 1Q Median 3Q Max

-1.4144 -0.7500 -0.2386 0.5838 2.4391

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.024277 0.919078 -0.026 0.9791

avg_axis_mor 0.012842 0.005078 2.529 0.0169 *

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for gaussian family taken to be 1.089074)

Null deviance: 39.636 on 31 degrees of freedom

Residual deviance: 32.672 on 30 degrees of freedom

AIC: 97.477

Number of Fisher Scoring iterations: 2

Here, we can clearly see that the weight of the leaves and the averaged axis are correlated, and the averaged axis variable is now a significant predictor (p = 0.0169).
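The averaging step used for this model can be sketched as follows. The toy numbers are made up for illustration, and the names `toy`, `avg_axis`, and `avg_weight` are assumptions standing in for `mor`, `avg_axis_mor`, and `avg_weight_mor`.

```r
# Hypothetical toy data: made up for illustration, NOT the lab measurements
toy <- data.frame(
  Longest.Axis       = c(150, 180, 200, 220, 170, 190),  # mm
  Complementary.Axis = c(110, 140, 160, 190, 120, 150),  # mm
  avg_weight         = c(2.0, 2.9, 3.6, 4.3, 2.4, 3.1)   # g
)

# Combine the two axes into one variable by taking their mean per plant
toy$avg_axis <- rowMeans(toy[, c("Longest.Axis", "Complementary.Axis")])

# Fit the same kind of model as above (gaussian family is glm's default)
fit <- glm(avg_weight ~ avg_axis, data = toy)

# Coefficient table: estimate, standard error, t value, p-value
print(coef(summary(fit)))
```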

Now, let's look at Freedom's Mix.

Here, it looks like all three variables are correlated.

> summary(fmod)

Call:

glm(formula = avg_weight_free ~ Longest.Axis + Complementary.Axis, data = free)

Deviance Residuals:

Min 1Q Median 3Q Max

-0.8606 -0.4031 -0.1565 0.3417 1.3652

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -1.328302 0.683507 -1.943 0.06173 .

Longest.Axis 0.015163 0.004990 3.039 0.00499 **

Complementary.Axis 0.002875 0.003717 0.773 0.44551

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for gaussian family taken to be 0.3716524)

Null deviance: 21.566 on 31 degrees of freedom

Residual deviance: 10.778 on 29 degrees of freedom

AIC: 63.989

Number of Fisher Scoring iterations: 2

However, the p-value shown above (0.44551) indicates that the complementary axis is not a significant predictor.

So, let's combine the two axes again and take their mean.

> summary(fmod2)

Call:

glm(formula = avg_weight_free ~ avg_axis_free, data = free)

Deviance Residuals:

Min 1Q Median 3Q Max

-0.8537 -0.4079 -0.1950 0.2780 1.2990

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.715077 0.561917 -1.273 0.213

avg_axis_free 0.015976 0.003155 5.064 1.95e-05 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for gaussian family taken to be 0.3875975)

Null deviance: 21.566 on 31 degrees of freedom

Residual deviance: 11.628 on 30 degrees of freedom

AIC: 64.418

Number of Fisher Scoring iterations: 2

As we expected, the averaged axis is now a significant predictor.

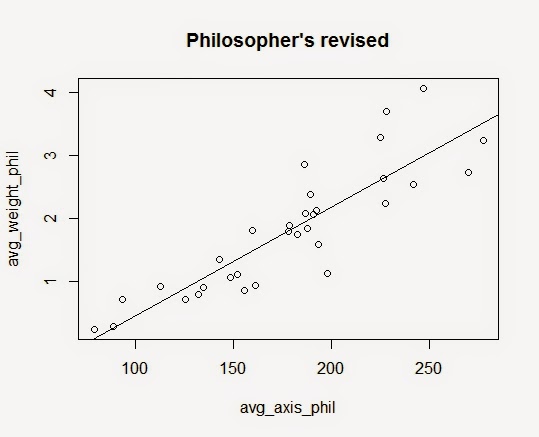

Now, let's look at the plot for Philosopher's Mix.

Here again, it seems like all three variables are strongly correlated with each other.

> summary(pmod)

Call:

glm(formula = avg_weight_phil ~ Longest.Axis + Complementary.Axis,

data = phil)

Deviance Residuals:

Min 1Q Median 3Q Max

-0.99924 -0.29086 -0.00653 0.18672 1.07923

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -1.611472 0.294235 -5.477 6.75e-06 ***

Longest.Axis 0.017902 0.002865 6.248 8.08e-07 ***

Complementary.Axis -0.001399 0.003081 -0.454 0.653

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for gaussian family taken to be 0.17441)

Null deviance: 30.1881 on 31 degrees of freedom

Residual deviance: 5.0579 on 29 degrees of freedom

AIC: 39.779

Number of Fisher Scoring iterations: 2

However, we again see that the complementary axis is not a significant predictor (p = 0.653). But we know (I checked) that the two axes are correlated.

So, let's combine the two axes and take their mean.

> summary(pmod2)

Call:

glm(formula = avg_weight_phil ~ avg_axis_phil, data = phil)

Deviance Residuals:

Min 1Q Median 3Q Max

-1.0221 -0.2485 -0.0054 0.1687 1.0776

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -1.273861 0.320245 -3.978 0.000406 ***

avg_axis_phil 0.017252 0.001734 9.951 5.14e-11 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for gaussian family taken to be 0.2339939)

Null deviance: 30.1881 on 31 degrees of freedom

Residual deviance: 7.0198 on 30 degrees of freedom

AIC: 48.268

Number of Fisher Scoring iterations: 2

Now, we see that the averaged axis is a significant predictor.

P.S. The root growth looks pretty good too.
